Lately I've been fascinated by the idea of what computer-generated creativity could look like. In this post I want to explore five different techniques for style transfer and implement them using Tensorflow.

We're going to examine the following methods:

- Basic Style Transfer (as described in Neural Artistic Style)

- Patch based Style Transfer (as described in Semantic Style Transfer)

- Doodle to Fine Art (as described in Semantic Style Transfer)

- Region-based Style Transfer

- Gradient mask-based Style Transfer

Note: All code for the examples below can be found here. To run it, you'll need pre-trained parameters of the VGG19 model in your local directory. A link to vgg19.npy can be found here.

A Simple Explanation of Style Transfer

"A Neural Algorithm of Artistic Style" introduced the foundational research where we can calculate a numeric representation of an image's style using outputs of layers in an image classification CNN. Now given 2 images, we can not only calculate their respective styles but also calculate their difference. We refer to this difference as style loss. By backpropagating style loss to, say, Image 1, we cause it to take on the style of Image 2. However, if ran for too many iterations, Image 1 just becomes a copy of Image 2 because that will minimize the style loss. To prevent this from happening, we also calculate the difference between the "stylized" Image 1 and what it originally looked like. We refer to this difference as content loss. The combination of style and content loss means that the "stylized" image takes on the style of Image 2 while retaining the original content of Image 1.

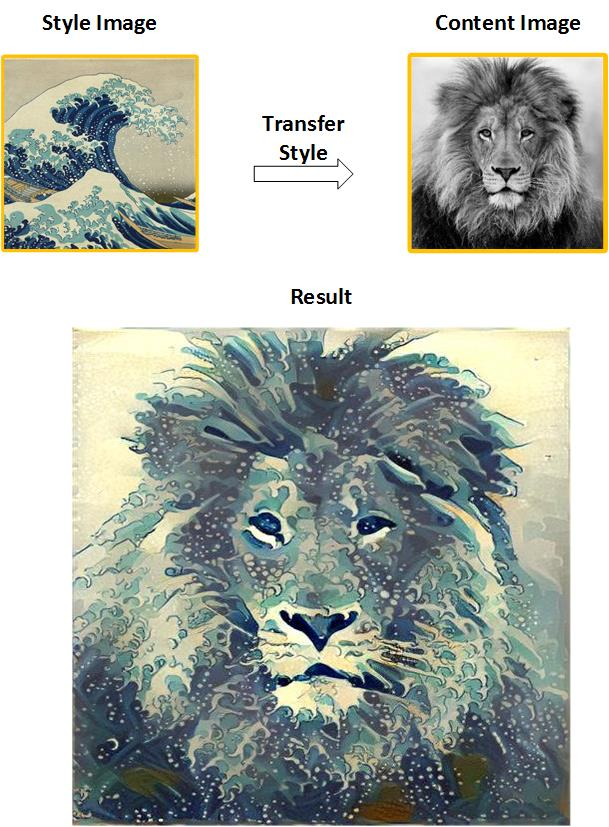

Basic Style Transfer

First, I sought to implement the foundational technique where the style of the whole style image is transferred to the whole content image.

When transferring style between images of vastly different dimensions, one suggestion is to normalize the style of each image by the number of pixels in that image. This normalization factor can be adjusted to control the "intensity" of the transferred style.

Patch-based Style Transfer

Next we have a patch based technique where the content image is split into patches, and for each patch we transfer the style from a patch (not necessarily same size) in the style image which is semantically similar (e.g. if a patch in content image contains a tree and the sky, we want to transfer the style of a patch from style image which which also contains a tree and the sky). As finding semantically similar patches using the images is difficult, we create simpler semantic maps instead.

When calculating the style of a patch, we can mask the image by making all pixels outside the patch 0. However, this means that we are doing a lot of unnecessary operations. So to avoid this, we extract the patch as a sub-image and perform the calculation.

Doodle to Fine Art

This method implements a variation of the patch based technique where there is no content image, only a content semantic map. This way when style transfer is performed, each patch essentially becomes a copy of the corresponding patch in style image.

Region based Style Transfer

Here is a region/mask based technique inspired by the patch based technique. Instead of transferring style between patches, we transfer style between entire regions instead. Regions can also act as a hard mask where style is only transferred to certain regions of the content image.

Again, when transferring style between regions of vastly different sizes, we suggest the style of each region is normalized by the number of pixels in that region.

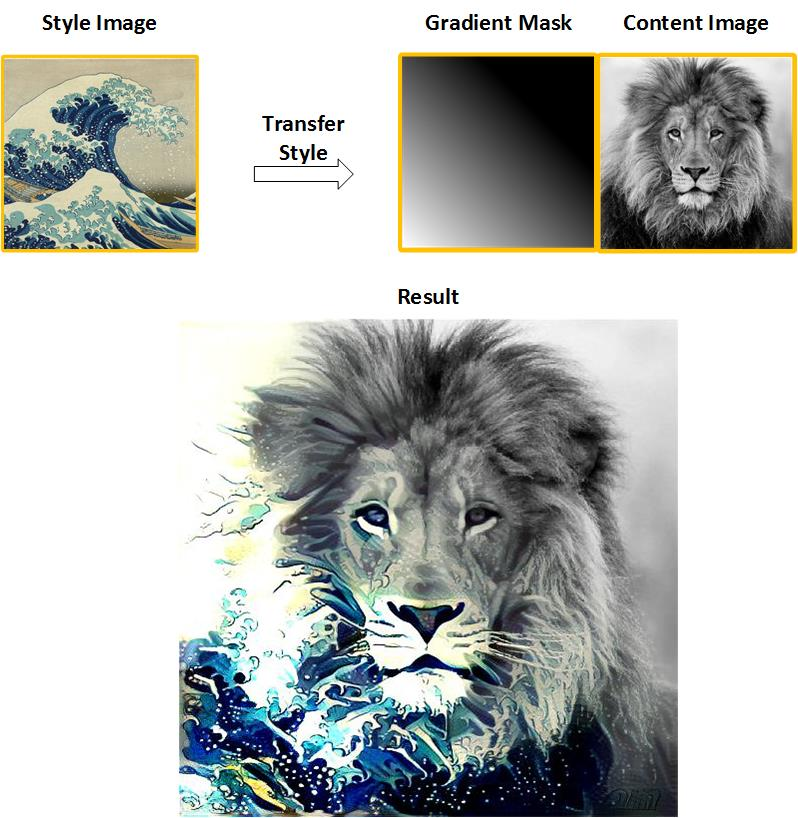

Gradient Mask based Style Transfer

Finally, we implement a gradient mask based technique inspired by the patch based technique. We observed that the previous patch/region based techniques are essentially multiplying all pixels outside the patch/region by 0, while multiplying the pixels inside the patch/region by 1. The gradient mask technique extends this by allowing for values between 0-1.

Because we are using the entire image in the style calculation instead of extracting a sub-image, the operation is expensive. We found that having even just a few gradient masks will result in performance and/or memory issues. Again, we suggest that the style of an image without a mask is normalized by the number of pixels, and the style of an image with a mask is normalized by the sum of the mask (assuming its values lie in range 0-1).